Artificial Intelligence (AI) voice generators have come a long way since the early days of rule-based text-to-speech (TTS) systems. These advanced technologies now leverage deep learning and neural networks to produce highly realistic and natural-sounding voices.

At the core of AI voice generators are neural networks that analyze and process the input text. They break the text down into words, syllables, and phonemes, then generate corresponding speech patterns that account for intonation, stress, and pacing to create natural-sounding speech.
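The text breakdown described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular product works: the `LEXICON` dictionary, its ARPAbet-style symbols, and the letter-by-letter fallback are assumptions made for the example. Real systems rely on large pronunciation dictionaries or learned grapheme-to-phoneme models.

```python
import re

# Hypothetical mini-lexicon with ARPAbet-style phoneme symbols (illustrative only).
LEXICON = {
    "hello": ["HH", "AH", "L", "OW"],
    "world": ["W", "ER", "L", "D"],
}


def tokenize(text):
    """Lowercase the text and split it into word tokens, dropping punctuation."""
    return re.findall(r"[a-z']+", text.lower())


def to_phonemes(text):
    """Map each word to phonemes; unknown words are spelled out letter by letter."""
    result = []
    for word in tokenize(text):
        result.append((word, LEXICON.get(word, list(word.upper()))))
    return result


print(to_phonemes("Hello, world!"))
```

A later stage would attach stress, pitch, and duration to each phoneme before any audio is generated.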

One key advantage of AI voice generators is their ability to learn and adapt. By training on vast amounts of human speech data, they continually refine their output, becoming more accurate and realistic.

Machine learning enables them to pick up on subtle nuances and variations in human speech, making the generated voices increasingly human-like.

Importance of AI voice generators in 2024

In 2024, AI voice generators play an important role across various industries and applications. They enhance user experiences in virtual assistants and chatbots, making interactions more engaging.

AI-generated voices are already widely used in audiobooks, podcasts, background music, and professional voiceovers, enabling faster and more cost-effective production.

They also enable personalized and immersive audio experiences in gaming, entertainment, and education, and they serve as valuable accessibility tools.

As demand for natural and engaging spoken audio content grows, AI voice generators have become essential in 2024, shaping communication and information consumption in the digital age.

The Evolution of AI Voice Generators

Early text-to-speech systems:

- AI voice generators began with early text-to-speech (TTS) systems

- Relied on rule-based approaches and concatenative synthesis

- Combined pre-recorded speech segments to generate spoken words

- Output sounded robotic, lacked natural intonation, and offered limited voice customization

- Despite these limitations, these systems laid the foundation for future advancements

Advancements in deep learning and neural networks:

- Deep learning and neural networks have revolutionized AI voice generation

- Architectures like recurrent neural networks (RNNs), including long short-term memory (LSTM) networks, enable AI voice generators to learn and model complex speech patterns

- Training on vast voice data allows models to capture nuances of pronunciation, intonation, and emotion

- Generative adversarial networks (GANs) enhance the quality and naturalness of AI-generated voices

- Lead to highly realistic and human-like speech

- Improved control over voice characteristics and styles

- As deep learning techniques evolve, AI voice generators in 2024 offer unprecedented levels of naturalness, expressiveness, and customization

How AI Voice Generators Work in 2024

Data collection and preprocessing

AI voice generators need large amounts of voice data for training. This data comes from recorded human speech, such as audiobooks, podcasts, and voiceover recordings.

The data is preprocessed to remove noise, normalize volume, and segment speech. Preprocessing ensures data quality and consistency for effective AI model training.
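As a rough sketch of these preprocessing steps, the snippet below works on a plain list of float samples in the range -1 to 1. The function names, the `threshold` value, and the use of silence trimming as a stand-in for noise removal are all assumptions made for illustration. Production pipelines typically use dedicated audio libraries and more sophisticated denoising.

```python
def peak_normalize(samples, target_peak=0.9):
    """Scale samples so the loudest one has magnitude target_peak."""
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        return list(samples)
    return [s * target_peak / peak for s in samples]


def trim_silence(samples, threshold=0.02):
    """Drop leading and trailing samples quieter than threshold."""
    loud = [i for i, s in enumerate(samples) if abs(s) >= threshold]
    if not loud:
        return []
    return samples[loud[0]:loud[-1] + 1]


def segment(samples, segment_len):
    """Split samples into consecutive chunks of at most segment_len samples."""
    return [samples[i:i + segment_len] for i in range(0, len(samples), segment_len)]


raw = [0.0, 0.0, 0.1, -0.3, 0.2, 0.0, 0.0]
clean = peak_normalize(trim_silence(raw))
chunks = segment(clean, 2)
print(len(clean), len(chunks))
```

Trimming before normalizing keeps the peak measurement focused on the speech itself rather than on padding.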

Training the AI model

1. Encoder-decoder architecture

AI voice generators commonly use an encoder-decoder architecture. The encoder converts the input text into a fixed-length numerical representation.

The decoder generates the corresponding speech waveform from this representation. This architecture helps the model learn the mapping between text and spoken audio.
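To make the data flow concrete, here is a toy, untrained encoder-decoder in plain Python. Everything in it is an assumption chosen for illustration: the dimensions, the random weights, the mean-pooling encoder, and the simple recurrent decoder. It produces meaningless frames, but it shows how text of any length becomes one fixed-length vector that is then unrolled into a sequence of output frames.

```python
import math
import random

random.seed(0)
EMBED_DIM = 4  # size of the fixed-length text representation (assumed)
FRAME_DIM = 3  # size of each generated acoustic frame (assumed)

# Randomly initialised, untrained parameters: one embedding per character.
EMBED = {ch: [random.uniform(-1, 1) for _ in range(EMBED_DIM)]
         for ch in "abcdefghijklmnopqrstuvwxyz "}
W_OUT = [[random.uniform(-1, 1) for _ in range(EMBED_DIM)] for _ in range(FRAME_DIM)]
W_REC = [[random.uniform(-1, 1) for _ in range(FRAME_DIM)] for _ in range(EMBED_DIM)]


def encode(text):
    """Encoder: average character embeddings into one fixed-length vector."""
    vectors = [EMBED[ch] for ch in text.lower() if ch in EMBED]
    if not vectors:
        raise ValueError("text contains no known characters")
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def decode(context, num_frames):
    """Decoder: emit frames one at a time, feeding each frame back in."""
    state = list(context)
    frames = []
    for _ in range(num_frames):
        frame = [math.tanh(sum(w * s for w, s in zip(row, state))) for row in W_OUT]
        frames.append(frame)
        # Next state combines the text context with the frame just produced.
        state = [math.tanh(c + sum(w * f for w, f in zip(row, frame)))
                 for c, row in zip(context, W_REC)]
    return frames


context = encode("hello world")
frames = decode(context, num_frames=5)
print(len(context), len(frames), len(frames[0]))
```

In a trained system the weights would be learned from paired text and audio, and the frames would be acoustic features that a later stage turns into a waveform.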

2. Attention mechanisms

Attention mechanisms improve alignment between the input text and the generated speech. They help the model focus on the relevant parts of the input sequence at each output step, resulting in more accurate and natural-sounding speech. Attention mechanisms are widely used in state-of-the-art AI voice generators.
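One common form of attention is scaled dot-product attention, sketched below in plain Python. Which variant a given voice generator uses is not stated here, so treat this choice, along with the toy 2-dimensional vectors, as an assumption. At each decoder step, a query is compared with every encoded input token, and the resulting weights say how much each token contributes.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Return (context, weights) for a single decoder step."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    context = [sum(w * value[i] for w, value in zip(weights, values))
               for i in range(len(values[0]))]
    return context, weights


# Three encoded input tokens (hypothetical 2-dimensional vectors).
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = keys
query = [0.0, 5.0]  # decoder state that matches the second dimension
context, weights = attend(query, keys, values)
print([round(w, 3) for w in weights])
```

The first token receives almost no weight because it has nothing in common with the query, which is the "focus" the paragraph above describes.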

3. Generative adversarial networks (GANs)

GANs enhance the quality and naturalness of computer-generated voices and speech. They consist of a generator that produces speech samples and a discriminator that distinguishes between real and computer-generated voice samples.

The generator tries to fool the discriminator by creating realistic speech, while the discriminator's feedback pushes the generator to improve its output. This adversarial training process leads to more human-like speech.
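The two competing objectives can be written as a pair of loss functions. The sketch below assumes the discriminator outputs a probability strictly between 0 and 1 that a sample is real, and it uses the standard binary cross-entropy formulation with the commonly used non-saturating generator loss. The example scores are made up for illustration.

```python
import math


def discriminator_loss(real_scores, fake_scores):
    """Binary cross-entropy: real samples should score near 1, fakes near 0."""
    real_term = -sum(math.log(p) for p in real_scores) / len(real_scores)
    fake_term = -sum(math.log(1.0 - p) for p in fake_scores) / len(fake_scores)
    return real_term + fake_term


def generator_loss(fake_scores):
    """Non-saturating loss: low when the discriminator rates fakes as real."""
    return -sum(math.log(p) for p in fake_scores) / len(fake_scores)


# Early in training the discriminator easily spots generated speech...
early = generator_loss([0.1, 0.2])
# ...later it can no longer tell, so it outputs about 0.5 for every sample.
later = generator_loss([0.5, 0.5])
print(early > later)
```

Training alternates between lowering the discriminator's loss and lowering the generator's loss, and each update makes the other network's task harder.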

Synthesis and post-processing

After training, the AI model can generate speech from input text. The synthesis process converts the model's output into an audio waveform, which can be saved as an audio file in various formats.

Post-processing techniques, like signal processing and filtering, enhance the generated audio for clarity and naturalness.

These techniques remove artifacts, improve pronunciation, and add appropriate pauses and intonation. The result is a high-quality, human-like voice suitable for voiceovers and many other applications.
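A minimal sketch of this final stage, using only Python's standard library, might look like the following. A sine tone stands in for the model's output, a moving average stands in for filtering, and the helper names, sample rate, and pause length are assumptions chosen for the example.

```python
import io
import math
import struct
import wave


def moving_average(samples, window=3):
    """Smooth samples with a simple centered moving-average (low-pass) filter."""
    half = window // 2
    smoothed = []
    for i in range(len(samples)):
        chunk = samples[max(0, i - half):i + half + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed


def add_pause(samples, sample_rate, seconds):
    """Append silence to create a pause after a phrase."""
    return samples + [0.0] * int(sample_rate * seconds)


def to_wav_bytes(samples, sample_rate=16000):
    """Encode float samples in [-1, 1] as 16-bit mono PCM WAV data."""
    pcm = b"".join(struct.pack("<h", int(max(-1.0, min(1.0, s)) * 32767))
                   for s in samples)
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav_file:
        wav_file.setnchannels(1)
        wav_file.setsampwidth(2)
        wav_file.setframerate(sample_rate)
        wav_file.writeframes(pcm)
    return buffer.getvalue()


rate = 16000
tone = [0.5 * math.sin(2 * math.pi * 220 * n / rate) for n in range(rate // 10)]
processed = add_pause(moving_average(tone), rate, 0.05)
wav_data = to_wav_bytes(processed, rate)
print(len(processed), wav_data[:4])
```

Writing `wav_data` to a file with a `.wav` extension yields audio that standard players can open.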

Key Features of AI Voice Generators in 2024

Realistic and natural-sounding voices

In 2024, AI voice generators produce highly realistic and natural-sounding voices. The generated speech closely mimics human speech, with proper intonation, pacing, and pronunciation.

Deep learning and neural networks help AI models capture the subtle nuances of human speech. This realism enhances user experience and engagement.

Wide range of voice customization options

AI voice generators offer a wide range of voice customization options. Users can choose from pre-built or custom voices that vary in age, gender, accent, and speaking style.

Advanced AI voice models allow fine-grained control over pitch, speed, tone, and emotional range with just a few clicks. This flexibility enables users to create personalized and unique voices for virtual assistants, audiobooks, or custom voiceovers.

Multilingual support

AI voice generators in 2024 provide extensive multilingual support. They can generate speech in multiple languages and dialects, making them valuable for global communication and content creation. Well-trained models reproduce the distinctive features of each language.

Multilingual support expands the reach of AI-generated speech and enables localized content creation.

Emotional and expressive speech synthesis

AI voice generators can synthesize emotional and expressive speech. By modeling the emotional aspects of human speech, AI models generate voices that convey a range of emotions, such as happiness, sadness, anger, or excitement.

Expressive speech synthesis adds depth and authenticity to AI-generated voices. This capability is valuable in virtual assistants, entertainment, and gaming, where human-like interaction and expressive voiceovers enhance the experience.

Applications of AI Voice Generators in 2024

Virtual assistants and chatbots

- AI voice generators are widely used in virtual assistants and chatbots

- These AI-powered tools provide natural and engaging interaction experiences

- Realistic and expressive AI-generated voices communicate information, answer questions, and provide support in a human-like way

- Enhances user satisfaction and makes interactions feel more personalized

Audiobooks and podcasts

- AI voice generators revolutionize audiobook and podcast production

- Publishers and content creators use AI-generated voices to quickly and cost-effectively produce high-quality audio content

- Enables the creation of audiobooks in multiple languages and accents, catering to a diverse global audience

- AI-generated voices are also used for podcast intros, ads, and other audio elements, saving time and resources

Voiceovers for videos and animations

- AI voice generators transform video and animation voiceovers

- Content creators, animators, and video producers use AI-generated voices for narration, character voices, and other audio elements

- Eliminates the need for expensive and time-consuming voice-acting sessions

- Customizable voice options allow creators to find the perfect voice for their projects

- Enables localization by generating voiceovers in different languages, making content accessible to a broader audience

Accessibility tools for the visually impaired

- AI voice generators improve accessibility for the visually impaired

- Convert written text to natural-sounding speech, enabling visually impaired individuals to access books, articles, and websites

- AI-generated voices can read aloud documents, emails, and other digital content, providing a seamless way for visually impaired users to consume information

- The emotional and expressive capabilities of AI voice generators make the listening experience more engaging and enjoyable, enhancing overall accessibility and inclusivity

Challenges and Ethical Considerations

Deepfakes and misuse of AI-generated voices

- Advancements in AI voice generation raise concerns about deepfakes and misuse

- Deepfakes are synthetic media created using AI, replacing a person's likeness or voice with someone else's

- Malicious actors can use AI-generated voices to create fake audio content, such as fake news, impersonations, and fraudulent messages

- Fake audio content can deceive listeners and spread misinformation

- Ensuring proper use and consistent quality of AI voice generators becomes a crucial challenge

- Developing detection methods for fake audio content is essential

Privacy concerns related to voice data collection

AI voice generators require large amounts of human voice data for training.

This raises privacy concerns about:

- How voice data is collected

- How voice data is stored

- How voice data is used

- Individuals may not be aware that their voice data is being collected

- Individuals may not consent to their voice data being used to train AI models

- Ensuring transparency is essential to address privacy concerns

- Obtaining proper consent is crucial

- Implementing strict data protection measures is necessary

Clear guidelines and regulations are needed to govern:

- The collection of voice data

- The use of voice data

Ensuring diversity and inclusivity in voice models

- Ensuring diversity and inclusivity in AI voice models is a significant challenge

If training data is biased or lacks diversity, AI-generated voices may not accurately represent:

- Different demographics

- Different accents

- Different speaking styles

Biased or non-diverse AI voice models can:

- Perpetuate stereotypes

- Exclude certain groups from the benefits of AI voice technology

- Efforts must be made to collect diverse voice data

- Developing inclusive AI models that cater to a wide range of users is crucial

Inclusive AI models should represent:

- Different genders

- Different ages

- Different ethnicities

- Different linguistic backgrounds

Diversity and inclusivity should be considered in both:

- Training data

- AI-generated custom voices

Future Prospects

Advancements in emotional intelligence and contextual understanding

Future development of AI voice generators is likely to focus on emotional intelligence and contextual understanding. More sophisticated AI models will better grasp the emotional context of a text and generate voices that accurately convey those emotions.

This will lead to more natural and expressive AI-generated speech that adapts to different situations and user preferences.

Advancements in natural language processing will enable AI voice generators to understand complex queries, engage in human-like conversations, and provide personalized responses based on user context, preferred voice, and history.

Integration with other AI technologies

AI voice generators will integrate with other AI technologies for immersive experiences. Combining voice generation with computer vision, gesture recognition, and facial expression analysis will create interactive experiences.

Virtual characters in video games or VR can have AI-generated voices that sync with facial expressions and body language, creating believable interactions.

Integrating voice generators with machine translation will enable real-time voice translation, breaking down language barriers.

Combining AI voice generators with sentiment analysis and recommendation systems will open up possibilities for personalized content creation and delivery.

What industries benefit most from AI voice technology?

AI voice technology has the potential to benefit a wide range of industries. Some of the industries that can benefit the most from AI voice technology include:

1. Entertainment and media:

- Audiobook and podcast production

- Voiceovers for movies, TV shows, and animations

- Localization of content for international audiences

- Personalized audio experiences in gaming and interactive media

2. Customer service and support:

- Virtual assistants and chatbots for customer inquiries

- Automated phone support systems

- Personalized and multi-lingual customer interactions

- Improved accessibility for customers with visual impairments

3. Education and e-learning:

- Personalized learning experiences with AI-generated voices

- Accessible educational content for students with visual impairments

- Language learning applications with realistic pronunciation

- Automated grading and feedback systems

4. Healthcare and wellness:

- Voice-based assistants for patients and healthcare providers

- Accessible health information for individuals with reading difficulties

- Telemedicine and remote patient monitoring

- Voice-guided meditation and therapy applications

5. Automotive and transportation:

- In-vehicle voice assistants for navigation and control

- Voice-based interfaces for public transportation systems

- Accessible travel information for visually impaired individuals

- Voice-activated parking and toll payment systems

6. Marketing and advertising:

- Personalized and engaging audio advertisements

- Voice-based surveys and customer feedback collection

- Localized ad campaigns for international markets

- Voice-activated promotions and discounts

7. Telecommunications:

- Voice-based authentication and security systems

- Automated voice messaging and notification services

- Voice-controlled smart home devices and appliances

- Accessible communication tools for individuals with disabilities

8. Finance and banking:

- Voice-based banking and financial management

- Fraud detection and prevention through voice analysis

- Accessible financial services for visually impaired customers

- Voice-activated trading and investment platforms

These industries can leverage AI voice technology to enhance customer experiences, improve accessibility, streamline operations, and create innovative products and services.

As AI voice technology continues to advance, its applications and benefits will likely expand to even more industries in the future.

Conclusion

AI voice generators have come a long way since the early days of rule-based text-to-speech (TTS) systems. Advancements in deep learning and neural networks have enabled these technologies to produce highly realistic and natural-sounding AI voices.

They now offer customizable voices, support multiple languages, and can generate emotional and expressive speech through techniques like attention mechanisms and generative adversarial networks (GANs). Advanced AI voices have become increasingly common in virtual assistants, audiobooks, professional audio projects, voiceovers, and accessibility tools.

AI-generated voices can now be heard in YouTube videos, Google Play Books audiobooks, and other products built on text-to-speech technology. However, challenges like potential misuse (e.g., deepfakes), privacy concerns related to voice data collection, and the need for diversity and inclusivity in voice models remain.

Looking ahead, AI voice generators will transform various industries and shape how we interact with technology. As they advance in natural language processing (NLP) capabilities, such as emotional intelligence and contextual understanding, they will enable more human-like experiences.

Integration with other AI technologies, including computer vision, gesture recognition, and machine translation, will create immersive and interactive applications, revolutionizing entertainment, gaming, and virtual reality. AI voice generators will also break down language barriers and facilitate global communication.

Beyond 2024, AI voice generators have immense potential to transform content creation, personalization, and accessibility. By addressing challenges and ethical considerations, we can harness AI voice generation to create a more inclusive and connected world.

FAQs

How real does an AI-generated voice sound?

As of 2024, AI-generated voices have achieved a remarkable level of realism, and the best of them can be difficult to distinguish from human speech.

Advancements in deep learning, neural networks, and speech synthesis have enabled AI voice generators to mimic many aspects of human speech, including natural intonation, emotional nuances, and linguistic characteristics across languages and accents.

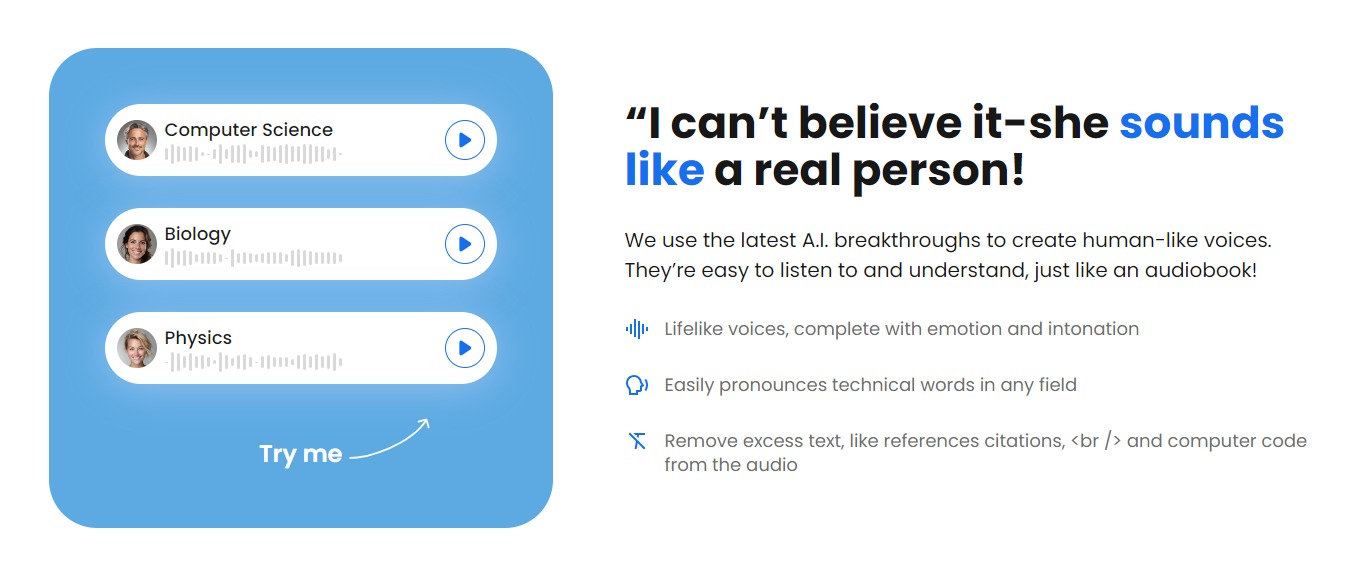

What is the AI tool that reads text aloud?

An AI tool that reads text aloud is a software application that uses artificial intelligence and text-to-speech (TTS) technology to convert written text into realistic spoken audio.

These tools analyze the input text and generate lifelike speech, allowing users to listen to the content instead of reading it.

One such AI tool is Listening.com, a platform that offers an AI voice generator with advanced TTS capabilities. This text-to-speech app uses AI and machine learning algorithms to create realistic and natural-sounding voices.